Turning a flat photograph into a detailed three-dimensional scene has long been a complex, data-hungry task. Meta's latest research release aims to bring that capability closer to everyday use by folding 3D understanding into its existing image models. With the launch of its SAM 3D tools, the company is extending its Segment Anything Model work to include object, scene and body reconstruction from standard two-dimensional images.

Announced as part of the broader SAM and SAM 3 ecosystem, the new components are designed to support use cases across augmented reality, virtual reality, game development and computer vision research, where accurate 3D information is often the missing layer.

What are the Meta SAM 3D tools designed to do?

The release spans two primary models. SAM 3D Objects focuses on reconstructing objects and scenes, generating 3D shapes, textures and layouts from a single input image. SAM 3D Body centres on human body estimation, allowing more realistic representations of people to be derived from flat photos.

Both models share a common goal: to convert two-dimensional images into richer three-dimensional data that captures structure and detail. In doing so, they aim to reduce the reliance on large, carefully captured 3D datasets, which are expensive and limited in variety compared with the flood of everyday images available online.

Meta is also publishing model checkpoints and inference code so that researchers and developers can test, adapt and build on the work. A new dataset, called SAM 3D Artist Objects, is planned to pair images with corresponding object meshes, providing a way to evaluate how well the models reconstruct real-world forms.

How does SAM 3D connect with Meta's broader SAM platform?

The new models sit alongside existing tools under the Segment Anything umbrella, including SAM 3, which is described as the company's latest model for image and video understanding. A web-based interface, the Segment Anything Playground, allows users to upload images, select objects or people and then generate 3D reconstructions using the underlying models.

In practical terms, this environment lets users experiment without needing to wire everything into their own pipeline from day one. Developers can try out object selection, segmentation and 3D reconstruction in a single place, then move into custom integration once they understand how the pieces interact.

What should companies know about Meta's SAM 3D tools in products?

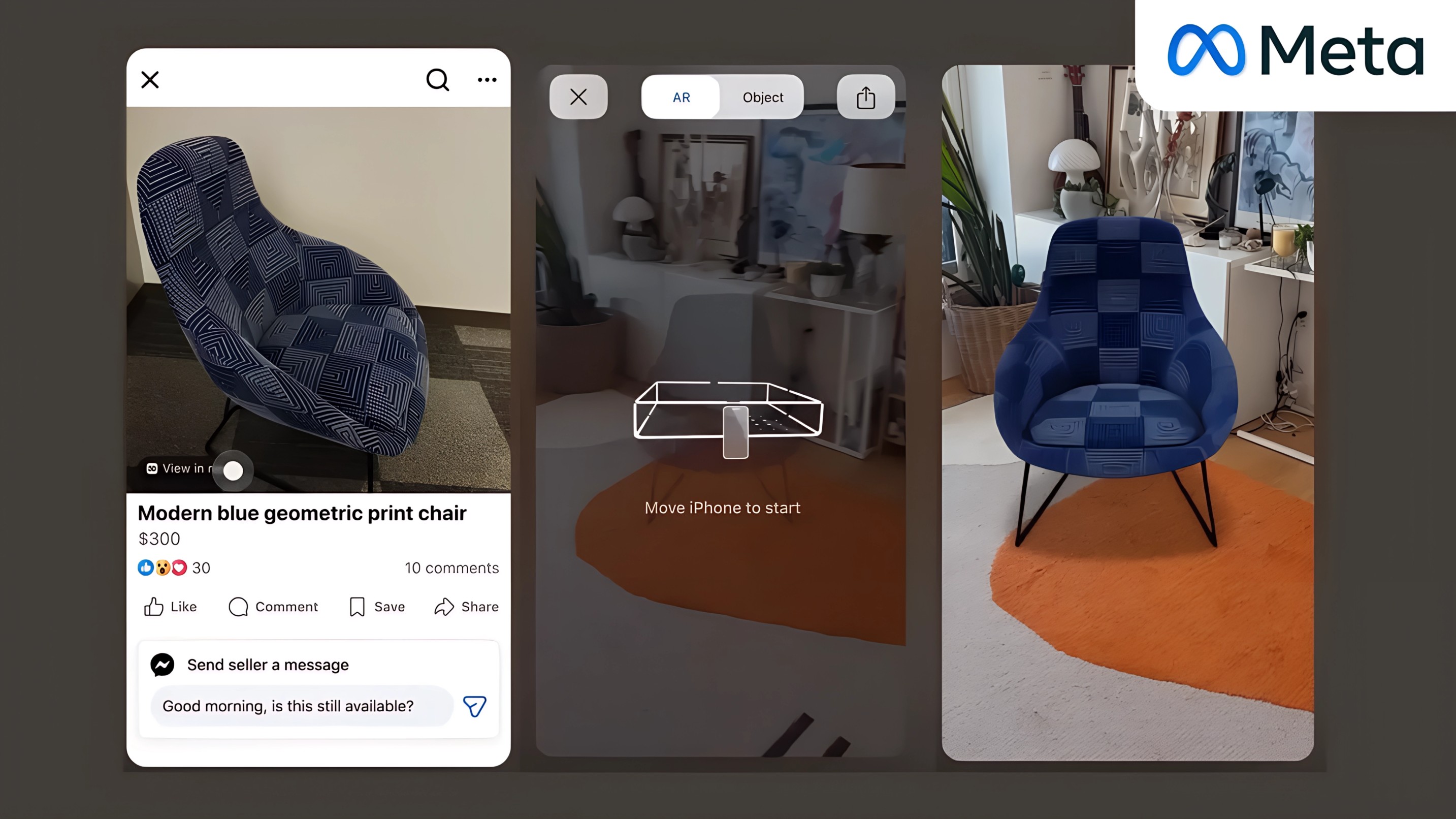

Meta notes that SAM 3D and SAM 3 are already being tested in a live product on Facebook Marketplace. A "View in Room" feature is in trial, allowing users to preview home decor items in their own spaces by generating suitable 3D representations from available images.

The company has not announced a timeline for wider deployment, but the experiment illustrates the kind of commercial use case it is targeting, where better 3D models can improve visualisation for shoppers without requiring manual 3D asset creation at scale.

Addressing limits in real-world 3D data

In earlier communications, Meta pointed out that progress in 3D modelling has been constrained by the scarcity of real-world 3D data, with much of what exists being synthetic or collected in controlled conditions. Everyday photos often involve occlusion, indirect views and varied environments, all of which make reconstruction harder.

The SAM 3D work is intended to bridge that gap by using powerful image understanding as a foundation for 3D prediction, teaching models to infer depth, volume and structure even when the input is imperfect.

For augmented reality and virtual reality applications, that could mean more convincing overlays and interactions. For game development and computer vision research, it promises faster iteration when building assets or testing algorithms on complex scenes.

By layering three-dimensional prediction on top of its existing segmentation and image understanding models, Meta aims to make high-quality 3D information more accessible to developers and researchers, opening the door to richer AR, VR, gaming and computer vision experiences built from the ordinary images people already capture every day.
